In our exclusive interview, Phison CEO Pua Khein Seng also told us where AI actually makes its money

(Image credit: ASEAN-China AI/YouTube)

The technology industry increasingly talks about GPUs as central to AI infrastructure, but the limiting factor that decides which models you can run is actually memory.

In a wide-ranging interview, Phison CEO Pua Khein Seng, who invented the world's first single-chip USB flash drive, told TechRadar Pro the focus on compute has distracted from a more basic constraint which shows up everywhere, from laptops running local inference to hyperscalers building AI data centers.

“In AI models, the real bottleneck isn’t computing power - it’s memory,” Pua said. “If you don’t have enough memory, the system crashes.”

Compensating for DRAM limits

That constraint is what's behind Phison’s aiDAPTIV+ work, which the company discussed publicly at CES 2026. In essence, it is a way to extend AI processing to integrated GPU systems by using NAND flash as a memory pool.

Pua describes it as using SSD capacity to compensate for DRAM limits and keep GPUs focused on compute instead of waiting on memory.

“Our invention uses SSDs as a complement to DRAM memory,” he says. “We use this as memory expansion.”

A practical goal is improving responsiveness during inference, especially Time to First Token, the delay between submitting a prompt and seeing the first output. Pua argues long TTFT makes local AI feel broken, even when the model eventually completes the task.

“If you ask your device something and have to wait 60 seconds for the first word, would you wait?” he says. “When I ask something, I can wait two seconds. But if it takes 10 seconds, users will think it’s garbage.”
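To make the metric concrete, here is a minimal Python sketch of how TTFT could be measured against any streaming token source. The function name `measure_ttft` and its injectable `clock` parameter are illustrative assumptions, not part of any Phison or aiDAPTIV+ interface.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_ttft(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[float, float, int]:
    """Return (ttft_seconds, total_seconds, token_count) for a token stream.

    `stream` is any iterable that yields tokens as they are generated.
    `clock` is injectable so the timing logic can be checked deterministically.
    """
    start = clock()          # the moment the prompt is submitted
    ttft = None
    count = 0
    for _ in stream:
        if ttft is None:
            # First token has arrived: this delay is the Time to First Token.
            ttft = clock() - start
        count += 1
    total = clock() - start  # time until the full response is complete
    if ttft is None:
        raise ValueError("stream produced no tokens")
    return ttft, total, count
```

In practice the `stream` argument would be the token iterator returned by whatever inference runtime is in use. A response that takes the same total time can feel very different depending on how much of that time is spent before the first token appears.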

Pua links TTFT improvements to better reuse of memory-heavy inference data, particularly KV cache, comparing it to a doctor repeating the same instructions to every patient because nothing is saved between visits.

“In AI inference, there’s something called KV cache - it’s like cookies in web browsing,” he expanded. “Most systems don’t have enough DRAM, so every time you ask the same question, it has to recompute everything.”

Phison’s approach, Pua added, is to “store frequently used cache in the storage” so the system can retrieve it quickly when a user repeats or revisits a query.
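The general idea can be sketched as a two-tier cache: a small fast tier standing in for DRAM, with older entries spilled to disk instead of being thrown away. This is a toy illustration under stated assumptions, not Phison's implementation. The class name `TieredPrefixCache`, the whole-prompt hashing, and the pickle files standing in for SSD storage are all hypothetical; real inference engines cache per-token key/value tensors and match on token prefixes.

```python
import hashlib
import pickle
from collections import OrderedDict
from pathlib import Path


class TieredPrefixCache:
    """Hypothetical two-tier cache: a small in-memory tier that spills to disk."""

    def __init__(self, spill_dir, ram_slots=2):
        self.ram = OrderedDict()      # least recently used entry sits first
        self.ram_slots = ram_slots    # stand-in for limited DRAM capacity
        self.spill_dir = Path(spill_dir)
        self.spill_dir.mkdir(parents=True, exist_ok=True)

    @staticmethod
    def _key(prompt):
        return hashlib.sha256(prompt.encode("utf-8")).hexdigest()

    def _path(self, key):
        return self.spill_dir / f"{key}.kv"

    def put(self, prompt, kv_state):
        key = self._key(prompt)
        self.ram[key] = kv_state
        self.ram.move_to_end(key)
        # When the fast tier is full, write the oldest entry to disk
        # rather than discarding it.
        while len(self.ram) > self.ram_slots:
            old_key, old_state = self.ram.popitem(last=False)
            self._path(old_key).write_bytes(pickle.dumps(old_state))

    def get(self, prompt):
        """Return (kv_state, tier), where tier is 'ram', 'ssd', or 'miss'."""
        key = self._key(prompt)
        if key in self.ram:
            self.ram.move_to_end(key)
            return self.ram[key], "ram"
        path = self._path(key)
        if path.exists():
            state = pickle.loads(path.read_bytes())
            self.put(prompt, state)   # promote back into the fast tier
            return state, "ssd"
        return None, "miss"           # caller must recompute from scratch
```

Only a `miss` forces the expensive recompute. A hit in the slower tier costs a read from storage, which is the trade the quote describes: slower than DRAM, but far cheaper than starting over.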

That memory-first framing extends beyond laptops into how companies build GPU servers, as Pua notes many organizations buy extra GPUs not for compute throughput, but to collect more VRAM, which leads to wasted silicon.

“Without our solution, people buy multiple GPU cards primarily to aggregate memory, not for compute power,” he adds. “Most of those expensive GPUs end up idle because they’re just being used for their memory.”

If SSDs can provide a larger memory pool, Pua says, GPUs can be bought and scaled for compute instead. “Once you have enough memory, then you can focus on compute speed,” he notes. “If one GPU is slow, you can add two, four, or eight GPUs to improve computing power.”
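A rough back-of-the-envelope sketch shows how that over-buying arises. The function `gpus_needed` and every number in the usage note are hypothetical, chosen only to illustrate the arithmetic and not taken from Phison or any specific GPU.

```python
import math


def gpus_needed(model_mem_gb, vram_per_gpu_gb, required_tflops, tflops_per_gpu):
    """Compare GPU counts driven by memory capacity versus compute demand."""
    for_memory = math.ceil(model_mem_gb / vram_per_gpu_gb)
    for_compute = math.ceil(required_tflops / tflops_per_gpu)
    return {
        "for_memory": for_memory,
        "for_compute": for_compute,
        # The purchase is set by whichever constraint is larger.
        "bought": max(for_memory, for_compute),
        # Cards bought only to hold the model, beyond what compute needs.
        "bought_for_memory_only": max(0, for_memory - for_compute),
    }
```

With illustrative inputs of a 320GB working set, 80GB of VRAM per card, and a workload one card could serve computationally, `gpus_needed(320, 80, 100, 100)` reports four cards bought, three of them purely for their memory.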

244TB SSDs

From there, Pua widened the lens to the economics of hyperscalers and AI infrastructure, describing the current wave of GPU spending as necessary but incomplete, because the business case for AI depends on inference, and inference depends on data storage.

“CSPs have invested over $200 billion in GPUs,” he says. “They’re not making money directly from GPUs. The revenue comes from inference, which requires massive data storage.”

He summarized the situation with a line he returned to repeatedly: “CSP profit equals storage capacity.”

That argument also feeds into Phison’s push toward extreme-capacity enterprise SSDs. The company has announced a 244TB model, and Pua told us, “Our current 122TB drive uses our X2 controller with 16-layer NAND stacking. To reach 244TB, we simply need 32-layer stacking. The design is complete, but the challenge is manufacturing yield.”

He also outlined an interesting alternative route: higher-density NAND dies. “We’re waiting for 4Tb NAND dies. With those, we could achieve 244TB with just 16 layers,” he said, adding that timing would depend on manufacturing maturity.
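The two routes to 244TB follow from simple proportional scaling, sketched below. Two assumptions are baked in and are not stated in the interview: that the number of NAND packages per drive stays constant, and that the current 122TB drive uses 2Tb dies (inferred from the claim that 4Tb dies would double capacity at the same stack height). The function name `scaled_capacity_tb` is illustrative.

```python
def scaled_capacity_tb(base_capacity_tb, base_stack, base_die_tbit,
                       new_stack, new_die_tbit):
    """Scale drive capacity by stack height and die density.

    Assumes capacity is proportional to (dies per stack) x (bits per die),
    with the package count and overprovisioning ratio unchanged.
    """
    stack_factor = new_stack / base_stack
    density_factor = new_die_tbit / base_die_tbit
    return base_capacity_tb * stack_factor * density_factor


# Route 1: double the stack height, keep the (assumed) 2Tb dies.
taller_stack = scaled_capacity_tb(122, 16, 2, new_stack=32, new_die_tbit=2)

# Route 2: keep 16-high stacks, move to 4Tb dies.
denser_dies = scaled_capacity_tb(122, 16, 2, new_stack=16, new_die_tbit=4)
```

Both routes double the capacity of the 122TB baseline. The difference is where the manufacturing risk sits: in package assembly yield for the taller stack, or in the NAND maker's readiness for the denser die.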

On PLC NAND, Pua was clear Phison doesn’t control when it arrives, but he told us he intends to support it once manufacturers can ship it reliably.

“PLC is five-bit NAND, that’s primarily a NAND manufacturer decision, not ours,” he said. “When NAND companies mature their PLC technology, our SSD designs will be ready to support it.”

He was more skeptical about a different storage trend: tying flash directly into GPU-style memory stacks, sometimes discussed under labels like high-bandwidth flash. Pua argued the endurance mismatch creates a nasty failure mode.

“The challenge with integrating NAND directly with GPUs is the write cycle limitation,” he said. “NAND has finite program/erase cycles. If you integrate them, when the NAND reaches end-of-life, you have to discard the entire expensive GPU card.”

Phison’s preferred model is modular: “keeping SSDs as replaceable, plug-and-play components. When an SSD wears out, you simply replace it while keeping the expensive GPU.”
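The wear-out concern can be put in rough numbers with a common first-order endurance estimate: total host writes are approximately capacity multiplied by rated program/erase cycles, divided by write amplification. The sketch below uses that approximation; the function name `ssd_lifetime_years` is illustrative, and any inputs are hypothetical, not Phison specifications.

```python
def ssd_lifetime_years(capacity_tb, pe_cycles, daily_writes_tb,
                       write_amplification=1.0):
    """First-order estimate of flash lifetime under a steady write load.

    total host writes ~= capacity x P/E cycles / write amplification
    """
    total_host_writes_tb = capacity_tb * pe_cycles / write_amplification
    return total_host_writes_tb / daily_writes_tb / 365
```

Whatever the inputs, the result is finite, which is the point of the modular argument: a component with a predictable end-of-life is cheaper to live with when it can be swapped without discarding the GPU it serves.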

Taken together, Pua’s view of the AI hardware future is less about chasing ever-larger GPUs and more about building systems where memory capacity is cheap, scalable, and replaceable.

Whether the target is local inference on an integrated GPU or rack-scale inference in a hyperscaler, the company is betting that storage density and memory expansion will decide what’s practical long before another jump in compute does.

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.

Show More CommentsYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Guess where all the NAND flash components are going? The 8th (yes, eighth) 245TB SSD has been announced amidst race to quench AI storage thirst of hyperscalers

Guess where all the NAND flash components are going? The 8th (yes, eighth) 245TB SSD has been announced amidst race to quench AI storage thirst of hyperscalers

How AI, digital sovereignty and data localization are reshaping European data strategies

How AI, digital sovereignty and data localization are reshaping European data strategies

Why the flash crisis will last much longer this time

Why the flash crisis will last much longer this time

Building your dream PC is about to take longer and cost more - shortages see some shops now limiting SSD, HDD, and RAM purchases to prevent hoarding

Building your dream PC is about to take longer and cost more - shortages see some shops now limiting SSD, HDD, and RAM purchases to prevent hoarding

Seagate's new storage looks to cover everything you'll need for the age of AI - including up to 3.2 petabytes in a single enclosure

Seagate's new storage looks to cover everything you'll need for the age of AI - including up to 3.2 petabytes in a single enclosure

10 storage technologies that want to replace hard drives

Latest in Pro

10 storage technologies that want to replace hard drives

Latest in Pro

ServiceNow patches critical security flaw which could allow user impersonation

ServiceNow patches critical security flaw which could allow user impersonation

Python libraries used in top AI and ML tools hacked - Nvidia, Salesforce and other libraries all at risk

Python libraries used in top AI and ML tools hacked - Nvidia, Salesforce and other libraries all at risk

Hackers hijack LinkedIn comments to spread malware - here's what to look out for

Hackers hijack LinkedIn comments to spread malware - here's what to look out for

I’m a backup and recovery provider, but here’s why you shouldn’t just trust me

I’m a backup and recovery provider, but here’s why you shouldn’t just trust me

"We will set a high bar" - Microsoft reveals multiple new data centers, and promises your energy bills won't go up to pay for them

"We will set a high bar" - Microsoft reveals multiple new data centers, and promises your energy bills won't go up to pay for them

Experts warn this new Chinese Linux malware could be preparing something seriously worrying

Latest in Features

Experts warn this new Chinese Linux malware could be preparing something seriously worrying

Latest in Features

The RAM shortage claims another victim as PS5 SSD prices rocket — here's why now is the worst time to buy and what to do instead

The RAM shortage claims another victim as PS5 SSD prices rocket — here's why now is the worst time to buy and what to do instead

LG C6 OLED – everything we know about one of 2026's most anticipated TVs

LG C6 OLED – everything we know about one of 2026's most anticipated TVs

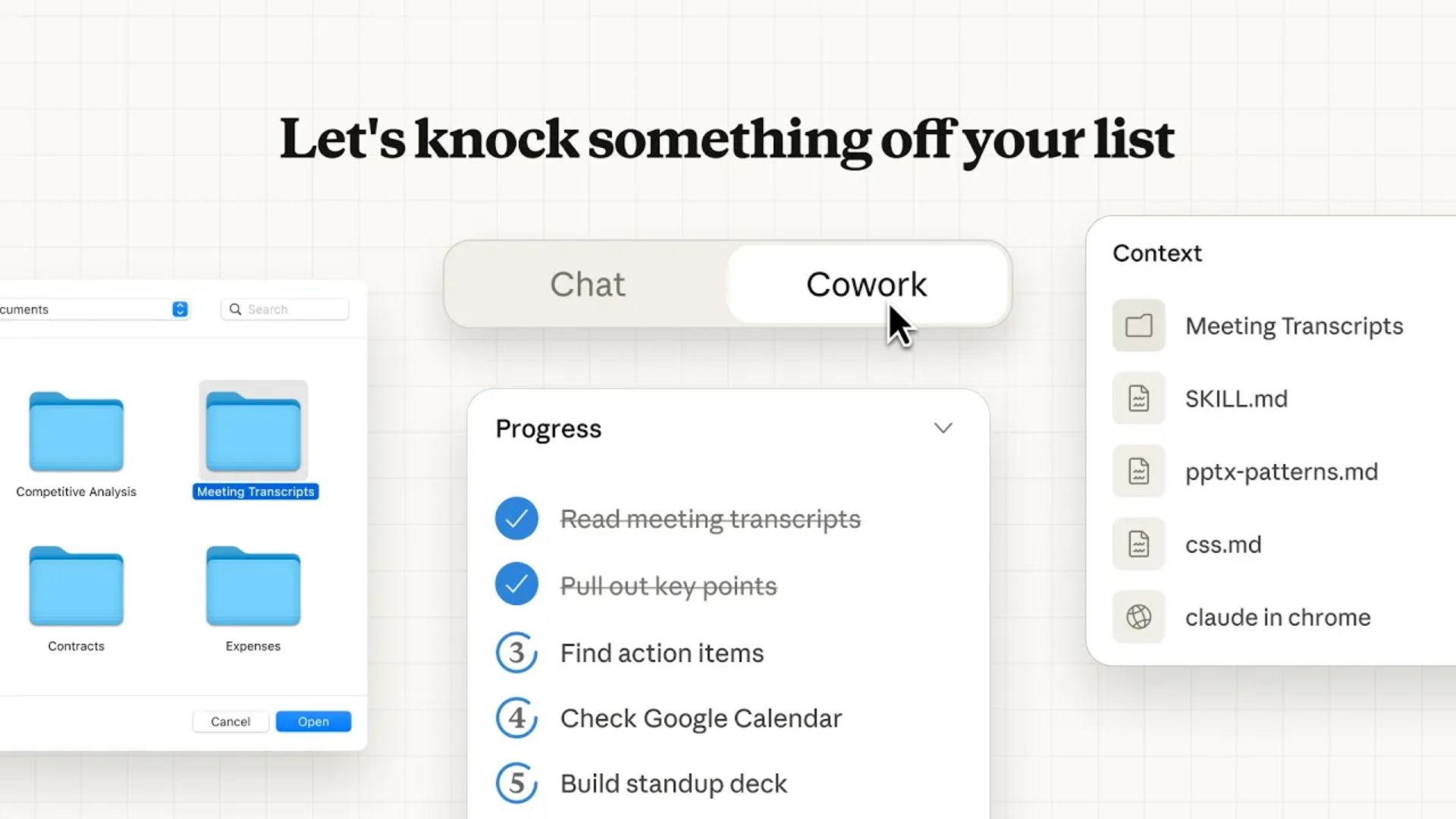

5 ways Claude's Cowork could be the biggest AI innovation of 2026

5 ways Claude's Cowork could be the biggest AI innovation of 2026

The Ikko MindOne Pro is like a Galaxy Z Flip 7 without the flip

The Ikko MindOne Pro is like a Galaxy Z Flip 7 without the flip

Music fans are demanding Spotify flags AI-generated music recommendations

Music fans are demanding Spotify flags AI-generated music recommendations

I heard Victrola's 'soundbase for turntables' speaker, and it might work

LATEST ARTICLES

I heard Victrola's 'soundbase for turntables' speaker, and it might work

LATEST ARTICLES- 16 popular Apple apps now need a subscription to get every new feature

- 2Is Kat Dennings in Avengers: Doomsday? Even she's not sure

- 3Hackers hijack LinkedIn comments to spread malware - here's what to look out for

- 4LG C6 OLED – everything we know about one of 2026's most anticipated TVs

- 5Prime Video releases violent first trailer for new pirate movie The Bluff