Watch out for when AI drifts from reality

Hallucinations are an intrinsic flaw in AI chatbots. When ChatGPT, Gemini, Copilot, or other AI models deliver wrong information, no matter how confidently, that's a hallucination. The AI might hallucinate a slight deviation, make an innocuous-seeming slip‑up, or commit to an outright libelous and entirely fabricated accusation. Regardless, hallucinations are inevitably going to appear if you engage with ChatGPT or its rivals for long enough.

Understanding how and why ChatGPT can trip over the difference between plausible and true is crucial for anyone who wants to talk to the AI. Because these systems generate responses by predicting what text should come next based on patterns in training data rather than verifying against a ground truth, they can sound convincingly real while being completely made up. The trick is to be aware that a hallucination might appear at any moment, and to look for clues that one is hiding in front of you. Here are some of the best indicators that ChatGPT is hallucinating.

Strange specificity without verifiable sources

One of the most annoying things about AI hallucinations is that they often include seemingly specific details. A fabricated response can mention dates, names, and other particulars that make it feel credible. Because ChatGPT generates text that looks like patterns it learned during training, it can create details that fit the structure of a valid answer without ever pointing to a real source.

You might ask a question about someone and see real bits of personal information about the individual mixed with a completely fabricated narrative. This kind of specificity makes the hallucination harder to catch because humans are wired to trust detailed statements.

Nonetheless, it's crucial to verify any detail that could cause problems for you if it turns out to be wrong. If a date, article, or person mentioned doesn’t show up elsewhere, that’s a sign you might be dealing with a hallucination. Keep in mind that generative AI doesn’t have a built‑in fact‑checking mechanism; it simply predicts what might be plausible, not what is true.
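
To make "plausible, not true" concrete, here is a toy sketch of next-word prediction. It is nothing like the scale or design of a real chatbot: the four-sentence corpus and the function names `train_bigrams` and `complete` are made up for illustration. It only shows how a system that continues text from learned patterns can attach a confident date to something it has never seen.

```python
from collections import Counter, defaultdict

# Tiny invented training corpus (an assumption for illustration only).
CORPUS = [
    "the study was published in 2019",
    "the study was published in 2021",
    "the study was published in nature",
    "the report was published in 2019",
]

def train_bigrams(sentences):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def complete(follows, prompt, max_words=5):
    """Greedily append the most frequent next word. Nothing is fact-checked."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no known continuation for this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
# No "survey" appears in the corpus, yet the model still supplies a date.
print(complete(model, "the survey was"))  # the survey was published in 2019
```

The output reads like a fact, but the year comes only from word frequencies in the training text, not from any record of a survey.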

Unearned confidence

Related to the specificity trap is the overconfident tone of many an AI hallucination. ChatGPT and similar models are designed to present responses in a fluent, authoritative tone. That confidence can make misinformation feel trustworthy even when the underlying claim is baseless.

AI models are optimized to predict likely sequences of words. Even when the AI should be cautious about what it writes, it will present the information with the same assurance as correct data. A human expert might hedge or say “I’m not sure,” but it's still unusual, though more common recently, for an AI model to say “I don't know.” That's because a full‑blown answer favors the appearance of completeness over honesty about uncertainty.

In any area where experts themselves express uncertainty, you should expect a trustworthy system to reflect that. For instance, science and medicine often contain debates or evolving theories where definitive answers are elusive. If ChatGPT responds with a categorical statement on such topics, declaring a single cause or universally accepted fact, this confidence might actually signal hallucination because the model is filling a knowledge gap with an invented narrative rather than pointing out areas of contention.

Untraceable citations

Citations and references are a great way to confirm if something ChatGPT says is true. But sometimes it will provide what look like legitimate references, except those sources don’t actually exist.

This kind of hallucination is particularly problematic in academic or professional contexts. A student might build a literature review on the basis of bogus citations that look impeccably formatted, complete with plausible journal names. Then it turns out that the work rests on a foundation of references that cannot be traced back to verifiable publications.

Always check whether a cited paper, author, or journal can be found in reputable academic databases or through a direct web search. If the name seems oddly specific but yields no search results, it may well be a “ghost citation” crafted by the model to make its answer sound authoritative.
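
If you have a long list of references to check, part of the bookkeeping can be automated. The sketch below assumes you have already collected titles you confirmed by hand in a database or web search. The function names `normalize_title` and `find_untraceable` and the placeholder titles are hypothetical, and the script does no searching of its own.

```python
import re

def normalize_title(title):
    """Lowercase and collapse punctuation so formatting differences don't matter."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def find_untraceable(cited_titles, confirmed_titles):
    """Return the cited titles that are missing from the confirmed list."""
    confirmed = {normalize_title(t) for t in confirmed_titles}
    return [t for t in cited_titles if normalize_title(t) not in confirmed]

# Placeholder data: titles the chatbot cited vs. titles you actually found.
cited = ["Placeholder Study A", "placeholder study a!", "Invented Study Z"]
confirmed = ["Placeholder Study A"]

print(find_untraceable(cited, confirmed))  # ['Invented Study Z']
```

Anything the function returns is only a candidate ghost citation. A title can be missing because of a typo or an incomplete search, so treat the result as a to-do list for manual checking rather than a verdict.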

Contradictory follow-ups

Confidently asserted statements with real references are great, but if ChatGPT contradicts itself, something may still be off. That's why follow-up questions are useful. Because generative AI does not have a built‑in fact database it consults for consistency, it can contradict itself when probed further. This often manifests when you ask a follow‑up question that zeroes in on an earlier assertion. If the newer answer diverges from the first in a way that cannot be reconciled, one or both responses are likely hallucinatory.

Happily, you don't need to look beyond the conversation to spot this indicator. If the model cannot maintain consistent answers to logically related questions within the same conversation thread, the original answer likely lacked a factual basis in the first place.
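
For anyone who queries a chatbot from code, the same follow-up test can be roughed out in a few lines. This is a minimal sketch under stated assumptions: `ask` is a hypothetical stand-in for whatever function sends a prompt and returns the reply, the canned replies about a fictional company are invented, and comparing exact strings only makes sense for short factual answers.

```python
def normalize_answer(answer):
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(answer.lower().split()).rstrip(".")

def consistency_report(ask, question, rephrasings):
    """Ask the same thing several ways and compare the normalized answers."""
    answers = {}
    for prompt in [question, *rephrasings]:
        answers[prompt] = normalize_answer(ask(prompt))
    distinct = set(answers.values())
    return {"consistent": len(distinct) == 1, "answers": answers}

# Stub chatbot with invented replies about a fictional company.
CANNED = {
    "What year was Acme Widgets founded?": "1987.",
    "When did Acme Widgets start?": "1992",
}

def fake_chatbot(prompt):
    return CANNED[prompt]

report = consistency_report(
    fake_chatbot,
    "What year was Acme Widgets founded?",
    ["When did Acme Widgets start?"],
)
print(report["consistent"])  # False
```

A `False` result does not tell you which answer is wrong, only that at least one of them needs checking against an outside source.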

Nonsense logic

Even if the internal logic doesn't contradict itself, ChatGPT's logic can seem off. If an answer is inconsistent with real‑world constraints, take note. ChatGPT writes text by predicting word sequences, not by applying actual logic, so what seems rational in a sentence might collapse when considered in the real world.

Usually, it starts with false premises. For example, an AI might suggest adding non‑existent steps to a well‑established scientific protocol, or ignore basic common sense. As happened with Gemini, an AI model might suggest putting glue in pizza sauce so the cheese sticks better. Sure, it might stick better, but as culinary instructions go, it's not exactly haute cuisine.

Hallucinations in ChatGPT and similar language models are a byproduct of how these systems are trained. Therefore, hallucinations are likely to persist as long as AI is built on predicting words.

The trick for users is learning when to trust the output and when to verify it. Spotting a hallucination is increasingly a core digital literacy skill. As AI becomes more widely used, logic and common sense are going to be crucial. The best defense is not blind trust but informed scrutiny.

Eric Hal Schwartz, Contributor

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.

Show More CommentsYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more We're entering a new age of AI moderation, but it may be too late to rein in the chatbot beast

We're entering a new age of AI moderation, but it may be too late to rein in the chatbot beast

Think you can trust ChatGPT and Gemini to give you the news? Here's why you might want to think again

Think you can trust ChatGPT and Gemini to give you the news? Here's why you might want to think again

Prompt styles to get the most out of ChatGPT 5.2

Prompt styles to get the most out of ChatGPT 5.2

Top 10 AI chatbot do's and don’ts to help you get the most out of ChatGPT, Gemini, and more

Top 10 AI chatbot do's and don’ts to help you get the most out of ChatGPT, Gemini, and more

Can top AI tools be bullied into malicious work? ChatGPT, Gemini, and more are put to the test, and the results are actually genuinely surprising

Can top AI tools be bullied into malicious work? ChatGPT, Gemini, and more are put to the test, and the results are actually genuinely surprising

Science fiction sold us “good” AI – now it’s shaping how we treat real AI

Latest in AI Platforms & Assistants

Science fiction sold us “good” AI – now it’s shaping how we treat real AI

Latest in AI Platforms & Assistants

‘It will refuse to produce anything illegal’: Elon Musk rails against Grok backlash, but UK Prime Minister says ‘we’re not going to back down’

‘It will refuse to produce anything illegal’: Elon Musk rails against Grok backlash, but UK Prime Minister says ‘we’re not going to back down’

Is Kat Dennings in Avengers: Doomsday? Even she's not sure

Is Kat Dennings in Avengers: Doomsday? Even she's not sure

We're definitely beta testing this technology": is Alexa+ really bad, or are our expectations for free services too high?

We're definitely beta testing this technology": is Alexa+ really bad, or are our expectations for free services too high?

Gemini gets its biggest upgrade yet 'Personal Intelligence' that uses your Gmail, Photos, Search and YouTube history - and it could be our first glimpse of the new Siri in iOS 27

Gemini gets its biggest upgrade yet 'Personal Intelligence' that uses your Gmail, Photos, Search and YouTube history - and it could be our first glimpse of the new Siri in iOS 27

Android Auto users still on Google Assistant are finding broken functions

Android Auto users still on Google Assistant are finding broken functions

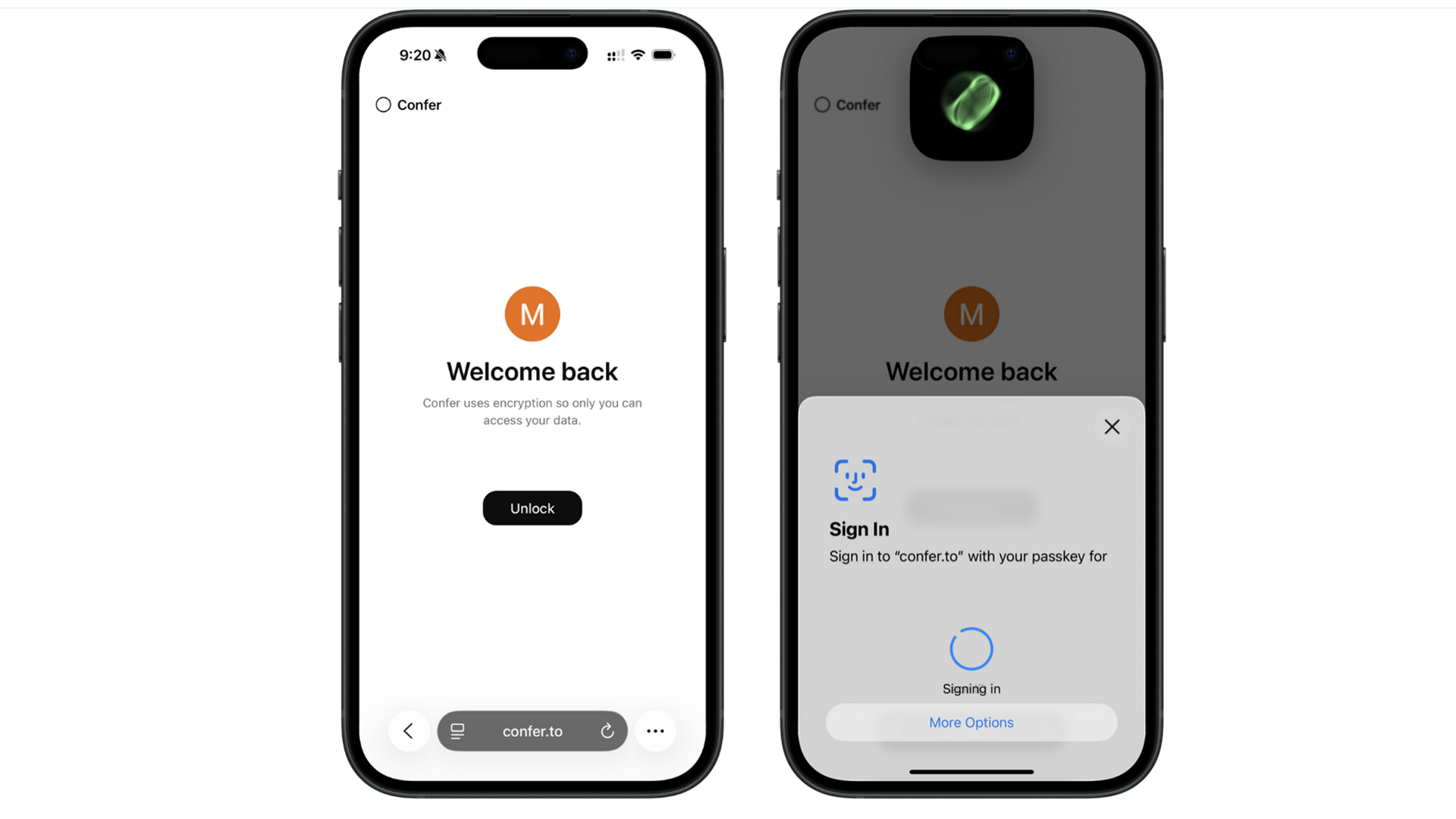

The creator of Signal has built an AI chatbot with the same privacy focus

Latest in Features

The creator of Signal has built an AI chatbot with the same privacy focus

Latest in Features

ChatGPT hallucinates, here's 5 ways to spot when it does

ChatGPT hallucinates, here's 5 ways to spot when it does

These PSVR 2 demos and game trials are must-plays if you recently picked up Sony's latest VR headset

These PSVR 2 demos and game trials are must-plays if you recently picked up Sony's latest VR headset

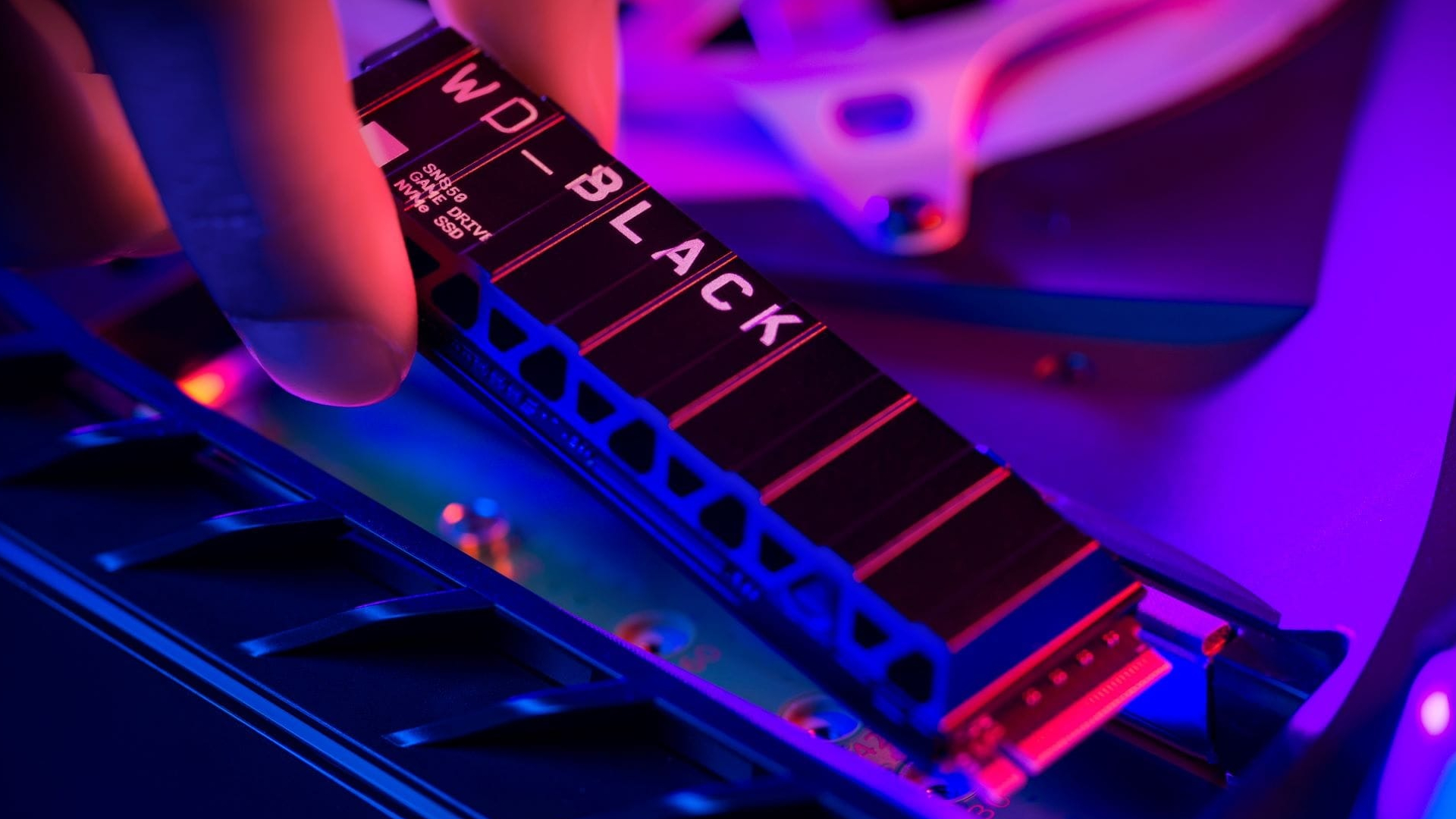

The RAM shortage claims another victim as PS5 SSD prices rocket — here's why now is the worst time to buy and what to do instead

The RAM shortage claims another victim as PS5 SSD prices rocket — here's why now is the worst time to buy and what to do instead

LG C6 OLED – everything we know about one of 2026's most anticipated TVs

LG C6 OLED – everything we know about one of 2026's most anticipated TVs

Exclusive: AMD head says 2026 will be a big year for AI PCs

Exclusive: AMD head says 2026 will be a big year for AI PCs

The Ikko MindOne Pro is like a Galaxy Z Flip 7 without the flip

LATEST ARTICLES

The Ikko MindOne Pro is like a Galaxy Z Flip 7 without the flip

LATEST ARTICLES- 1Meta just killed some of its best Quest 3 game studios — and convinced me to buy a Steam Frame instead of a Quest 4

- 2Micron says it's 'trying to help consumers' in RAM crisis despite killing its Crucial brand – and PC owners have got even angrier as a result

- 3More worrying tech supply chain news - no, it's not more RAM troubles, but this vital material could be set to cause issues sooner than expected

- 4Even AI skeptic Linus Torvalds is getting involved in 'vibe coding' - so could this herald a new dawn for Linux? Probably not...

- 5Companies confess their agentic AI goals aren't really working out - and a lack of trust could be why